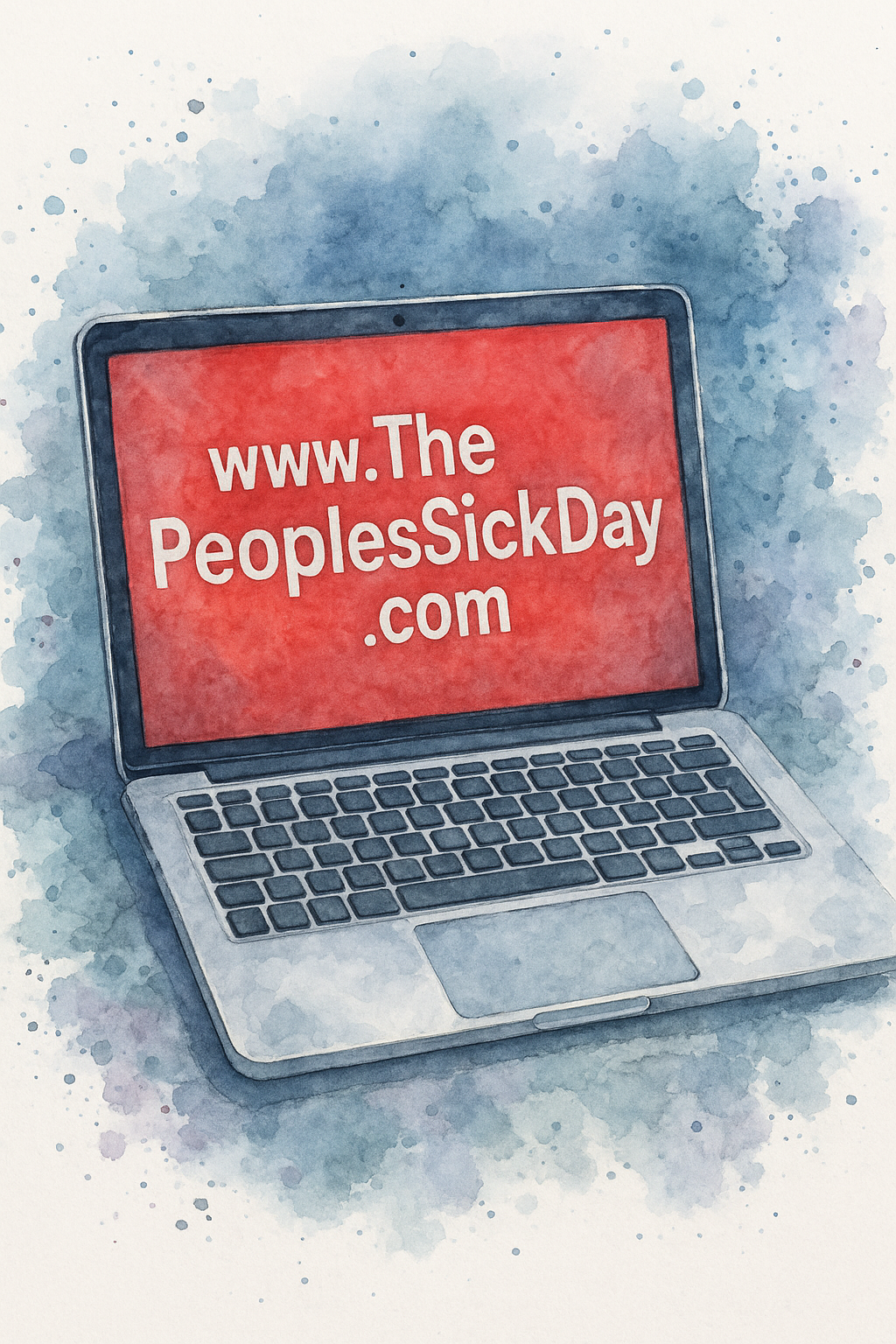

Let me say this upfront:

We know.

We know AI is a hot mess of ethical nightmares, corporate greed, mass displacement, plagiarism masquerading as progress, surveillance capitalism, and unregulated techno-colonialism wrapped in a friendly chatbot.

We know. We’ve read the essays. We’ve written some of them.

And yes, we still use it.

Why? Because the same system that poisoned the water also built the pipes. And sometimes, you still need to drink.

Let’s start with the obvious accusations, shall we?

“You’re using tools built by billion-dollar corporations that exploit labor and steal creative work.”

Correct.

And the phone you’re holding was made in factories that treat workers like malfunctioning robots. The streaming platform you’re watching is eating indie film alive. The grocery chain where you buy organic kale is destroying local farms.

This isn’t a defense. This is an indictment.

We are all compromised. The goal is not purity. The goal is transparency, resistance, and strategic use of tools that can—sometimes—help us survive inside the machine without becoming it.

“AI will destroy jobs, creativity, and reality itself.”

First of all: same.

Second: yes—we’re seeing it already. Creative industries are being gutted, educational systems are scrambling, and the line between human and algorithmic output is blurring like a chalk outline in the rain.

But here’s the thing:

We’re not using AI to replace humans. We’re using it to outmaneuver burnout, bureaucracy, and the prison of perfection.

We’re using it to sketch, prototype, brainstorm at the speed of ADHD panic, and build scaffolding faster than our trauma wants us to.

If your AI use is replacing a person’s voice? You’re doing it wrong.

If your AI use is amplifying a human’s voice, especially a neurodivergent one that the system usually drowns out? Then we’re having a different conversation.

“AI can’t be ethical. It’s built on stolen data and invisible labor.”

Absolutely. And until OpenAI, Google, Meta, and the rest of the techno-oligarchy get dragged through regulatory fire, every AI model is ethically haunted.

We do not pretend otherwise. We do not handwave the horror.

We also don’t believe in letting perfect be the enemy of possible.

What we do believe in:

- Radical transparency (we’ll tell you when we used AI and how)

- Human-first collaboration (AI as tool, not oracle)

- Open-source resistance where possible

- Calling out bad behavior from inside the machine

“But doesn’t using AI help normalize the very systems we’re critiquing?”

Yes.

And breathing normalizes capitalism. So does eating, making art, and being visible on the internet.

Survival is not complicity.

Complicity is silence. Complicity is pretending tech is neutral. Complicity is letting AI replace your voice because it’s faster.

We use AI loudly, messily, ethically, chaotically.

We use it like a crow uses a shiny spoon—to crack open locked systems, not to dine with the king.

Why We Actually Use AI (The Real Reasons)

- Because ADHD is real, and sometimes a co-writer that never sleeps is the difference between “idea graveyard” and “finished draft.”

- Because trauma kills momentum, and sometimes momentum is life.

- Because AI can help structure ideas at 2 a.m. when your brain is spiraling.

- Because we’re not trying to be pure. We’re trying to be useful. And sometimes, that means building with broken tools—while shouting about how they were broken in the first place.

This isn’t techno-utopianism. This is pragmatic rebellion.

We’re not worshiping the machine.

We’re yelling into it.

We’re building scaffolds from its ribs.

We’re carving messages into its bones.

We’re flipping off the void—and yes, we’re using a robot arm to do it.

If you want a world where AI is used ethically, start by watching how it’s used by the people it was never built for: the weirdos, the neurodivergent thinkers, the queer theorists, the burned-out therapists, the cults-that-aren’t-cults.

We didn’t ask for this system.

But we’ll be damned if we don’t use it to burn itself clean.